Data merge in SAS deleting records: code

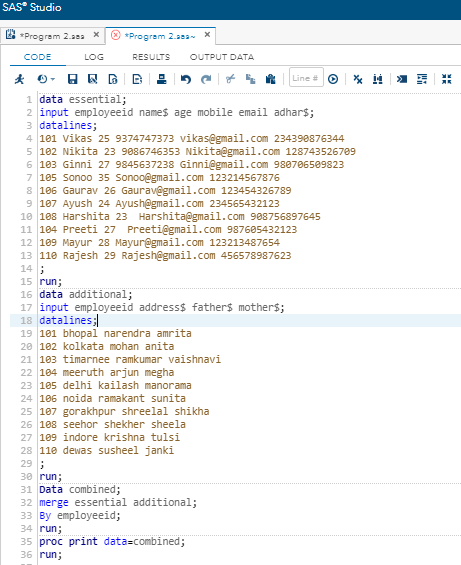

Code got SAS running, but it gave me the error below when I used the latest cut from Patrick, after adding `hash` to the DCL statement as novinosrin suggested. I'll post the sample data soon.

    356  if 0 then set R.data_large(keep=id var1 var2)
    NOTE: Data file R.data_large.DATA is in a format that is native to another host, or the file encoding does
          not match the session encoding. Cross Environment Data Access will be used, which might require
          additional CPU resources and might reduce performance.
    357  dcl hash h1 (dataset:'R.DX(keep=id var1 var2)',multidata:'y')
    ERROR: Hash object added 22020080 items when memory failure occurred.
    FATAL: Insufficient memory to execute DATA step program. Aborted during the EXECUTION phase.
    ERROR: The SAS System stopped processing this step because of insufficient memory.
    WARNING: The data set K.MERGED may be incomplete.
    WARNING: Data set K.MERGED was not replaced because this step was stopped.
    NOTE: DATA statement used (Total process time):
          cpu time            19.79

A SAS hash table resides in memory, and it appears that the load into memory failed after 22M rows. We can't fix that unless you get more memory for your session. Excluding all rows with a missing var1, `where=(var1 ne .)`, may also help keep the total row count below this 22M+ limit. I should have added that to the code from the start. You can estimate the memory requirement by summing the lengths of the 3 variables loaded into the hash and multiplying by the number of rows in your table (counting only rows with a non-missing var1). You can get information about the available memory via `proc options group=memory; run;`. You will need to talk to your SAS admin if you require more memory than is allowed for your SAS session.

I was about to recommend the code below (which clears memory) with confidence, but I'm now recommending it without confidence. It assumes both datasets have the same IDs and requires a sorted approach, hence the proc sort by id. The approach reads your large by id (i.e. only one id at a time) and loads it into the hash. It then reads your small and looks up the large. Finally, it clears the contents of the hash to free memory after processing each id.

    /*Creating small with unique IDs, as you mentioned 1:N*/
    proc sort data=sashelp.class out=small(keep=sex) nodupkey;
      by sex;
    run;

    if 0 then set z.data_large(keep=id var1 var2);
    dcl hash h1 (dataset:'z.data_large(keep=id var1 var2 where=(var1 ne .

A sort with nodupkey by ID, CODES and DATE halved the data size. However, the code you provided produced the want data set with 0 observations.
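
Patrick's memory estimate can be checked before attempting the hash load. The example below is a minimal sketch under assumptions. `work.large` is a hypothetical stand-in for R.data_large with made-up values. `id` is assumed to be the hash key, and `var1`/`var2` are assumed to be the data.

The sketch counts the rows the hash would actually hold (non-missing var1) without loading them into memory. It then compares Patrick's sum-of-lengths figure with the hash object's `ITEM_SIZE` attribute. `ITEM_SIZE` also includes the hash's internal per-item space, so it gives the more realistic (larger) estimate.

```sas
/* Hypothetical stand-in for R.data_large: names, lengths and values are assumptions */
data work.large;
  length id $8 var1 8 var2 8;
  input id $ var1 var2;
  datalines;
A 1 10
A . 11
A 2 12
B 3 13
B . 14
;
run;

/* Memory settings available to this session (MEMSIZE and related options) */
proc options group=memory;
run;

/* Rows the hash would actually hold: only those with a non-missing var1 */
proc sql noprint;
  select count(*) into :nrows trimmed
  from work.large
  where var1 is not missing;
quit;

data _null_;
  /* Define id, var1, var2 in the PDV without reading any rows */
  if 0 then set work.large(keep=id var1 var2);
  /* Empty hash with the same key/data layout, used only to query ITEM_SIZE */
  dcl hash h (multidata:'y');
  h.definekey('id');
  h.definedata('var1', 'var2');
  h.definedone();

  rows       = &nrows;
  item_bytes = h.item_size;  /* bytes per item, including internal overhead */
  /* Rule of thumb from the thread: sum of variable lengths times row count */
  sum_mb  = rows * (vlength(id) + vlength(var1) + vlength(var2)) / 1024**2;
  /* Same estimate using the hash object's own per-item size */
  hash_mb = rows * item_bytes / 1024**2;
  put rows= item_bytes= sum_mb= hash_mb=;
  stop;
run;
```

To run it against the real table, swap `work.large` for `R.data_large`. The PROC SQL count reads the table once but keeps nothing in memory.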

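The per-id idea described in the thread can be sketched as follows: load one id of the large table into the hash, look up the small table's row for that id, then clear the hash. This is an illustration under stated assumptions, not the code from the post. `work.small`, `work.large` and the `grp` column are hypothetical.

Instead of reading the two tables in separate loops, the sketch interleaves them with one SET statement and `by id`. This is a swapped-in technique. It means an id that is absent from one table (for example because every var1 for it is missing) cannot push the two reads out of step. `h.clear()` empties the hash at `last.id`, so at most one id's rows sit in memory at a time.

```sas
/* Hypothetical small table: one row per id (the 1 side of 1:N) */
data work.small;
  length id $8 grp $8;
  input id $ grp $;
  datalines;
A x
B y
C z
;
run;

/* Hypothetical large table: many rows per id, already sorted by id */
data work.large;
  length id $8 var1 8 var2 8;
  input id $ var1 var2;
  datalines;
A 1 10
A . 11
A 2 12
B 3 13
C . 14
;
run;

data work.want;
  if _n_ = 1 then do;
    /* Put id, var1, var2 in the PDV so the hash can be defined */
    if 0 then set work.large(keep=id var1 var2);
    dcl hash h (multidata:'y');
    h.definekey('id');
    h.definedata('var1', 'var2');
    h.definedone();
  end;

  /* Interleave by id: for each id, its large rows arrive before its small rows */
  set work.large(in=in_large keep=id var1 var2 where=(var1 ne .))
      work.small(in=in_small);
  by id;

  if in_large then h.add();    /* load only the current id's rows */
  else if in_small then do;    /* look up the large rows for this id */
    rc = h.find();
    do while (rc = 0);
      output;                  /* one output row per matching large row */
      rc = h.find_next();
    end;
  end;

  if last.id then h.clear();   /* free the memory before the next id */
  drop rc;
run;
```

With this sample data, `work.want` keeps two rows for A and one for B. C is dropped because its only large row has a missing var1. With both tables sorted by id, a plain DATA step `merge` with `by id` and `in=` flags gives the same 1:N inner join without any hash, which may be the simpler route.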